We had a tremendous turnout from the open science working group and other open scientists at the Open Knowledge Festival 2014 in Berlin, 15-17 July 2014.

Initial planning took place via the Open Science working group wiki, so you can see a list of attendees there.

The following is a summary of the OKFest open science sessions, with updates posted as new content is sent in via comments, @okfnscience or science@okfn.org. Enjoy and do contribute to the page and linked etherpads/wikis where you find something of interest!

Open Access Global Review

Introduction to Text and Data Mining (TDM)

Exploding Open Science! Awareness, training, funding

Reimagining Scholarly Communication

Skills and Tools for Web Native Open Science

Testing the efficiency of open versus traditional science #ovcs

Open Science meets Open Education meets Open Development – working better together

Open Data and the Panton Principles for the Humanities. How do we go about that?

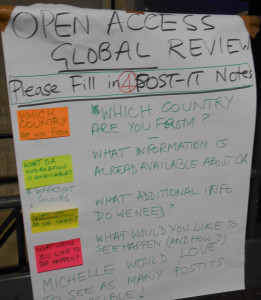

Open Access Global Review #OKFestOA


Sched Entry

Etherpad

Storify

Organised by:

Michelle Brook, who unfortunately could not join us on the day. The session was therefore co-facilitated by Joe McArthur (Open Access Button/Right to Research Coalition), Cameron Neylon (PLOS), Jenny Molloy (OK Open Science WG) and Peter Murray-Rust (OK Open Science WG/ContentMine).

It would have been fantastic to give a global review of open access publishing in an hour but this was simply not possible due to the lack of detailed data on scholarly publishing and open access currently available. Joe McArthur introduced this problem and the session then took an active approach to discovering what data is needed and what steps can be taken to ensure that we can deliver a global review by the next OKFest. Cameron Neylon navigated us through the large amount of material gathered from participants on what information was available in their country, what information is required and how we could go about obtaining it. Our OA world map soon started to fill up!

Regional groups then met to move things forward and you can read their full notes on the etherpad, but the crucial outcomes were five separate project suggestions:

- Open Access Census – reworking the Open Data Census for Open Access information [wiki]

- Create or reuse a platform for requesting data on the cost of subscription journals or open access publication to universities. This project should encourage centralised submission of requests and make using FOI laws simple where necessary as well as providing assistance with wording etc. [wiki]

- Measuring the efficiency of open access: How can we demonstrate the benefits of different licenses? [wiki]

- Work towards making open access policies machine readable [wiki]

Cameron Neylon caused a buzz at the end of the session by offering $1000 each to the top three open access projects tweeted to #OKFestOA – let’s hope to see some great suggestions! All of the projects above are linked to a wiki section for further planning so dig in and edit as you see fit.
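To make the "machine readable open access policies" suggestion a little more concrete, here is a minimal sketch of what a policy record could look like once it is expressed as data rather than prose. Everything in it is an assumption for illustration: the `OAPolicy` class, its field names and the example institution are hypothetical and do not come from the session or from any existing standard.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class OAPolicy:
    """Hypothetical record describing one institution's open access policy."""
    institution: str
    requires_deposit: bool   # must authors deposit a copy in a repository?
    embargo_months: int      # delay allowed before the copy becomes open
    licence: str             # licence the policy asks for, e.g. "CC BY"


def to_json(policy: OAPolicy) -> str:
    """Serialise the policy so that other tools can read and compare it."""
    return json.dumps(asdict(policy), sort_keys=True)


def allows_immediate_access(policy: OAPolicy) -> bool:
    """True when deposit is required and no embargo period applies."""
    return policy.requires_deposit and policy.embargo_months == 0


# Illustrative data only; not a real institution or a real policy.
example = OAPolicy("Example University", True, 6, "CC BY")
print(to_json(example))
print(allows_immediate_access(example))
```

Once policies are in a shape like this, questions such as "which institutions allow an embargo?" become a simple filter rather than a reading exercise.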

Introduction to Text and Data Mining (TDM) #TDM


Sched Entry

Etherpad

Storify

Organised by:

Open Science Open Knowledge Working Group, Creative Commons and ContentMine. Led by Peter Murray-Rust, Ross Mounce and Puneet Kishor.

Peter Murray-Rust kicked off this exploration of the practical uses and legal considerations of content mining with a presentation on its potential to liberate facts from the scientific literature.

#TDM and glitter balls at #OKFest14

Participants then marked up a paper to explore the difficulties a machine might face in identifying facts, difficulties which ContentMine software aims to overcome. Despite the massive potential of text and data (or content) mining to benefit research, deployment of the available technology is held back by copyright and contract law governing access to and reuse of the scientific literature.

Puneet Kishor delivered a detailed and illuminating presentation on the legal implications of content mining, covering current copyright law in various jurisdictions, fair use and the case law that informs its definition in the US, and the copyright exemptions for content mining recently enacted by the UK government. The group then split into coders and practitioners, with coders attempting to install and run the software while others discussed potentially interesting applications and research questions that content mining could help to answer. If you had access to the whole corpus of scientific literature (or another dataset of interest) and the ability to mine facts like chemical structures, phylogenetic trees, species names, geolocations, dates and other entities, what questions would you ask? Responses included:

- Following campaigns or politicians on social media to discover which issues are getting attention.

- Tracking authors/licenses/institutions/funders in the scientific literature to answer questions such as: Who is publishing OA? What license is being used (numbers/trends)? Where are authors in a particular discipline and who do they collaborate with?

- Tracking changes in language usage through texts over time.

- Analysis of (local) government press releases by political scientists.

- Better search tools for academics.

- Liberating phylogenetic tree data (Ross Mounce and the PLUTo project)

- Linking chemical structures and species through phylogeny.

Part of the ContentMine team by a kangaroo in the Museum für Naturkunde in homage to our AMI software mascot.

If you can think of more, please tweet them to us on @TheContentMine.

For those who wished to get more hands-on, ContentMine ran a half-day workshop on Friday 18 July at the Museum für Naturkunde Berlin, where scrapers and text mining software were deployed to extract occurrences of species names from scientific papers. In the afternoon, a mini-hackathon led to the creation of new scrapers and more code for the project.
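To give a flavour of what extracting species names from text involves, here is a deliberately simplified sketch. It is not the ContentMine or AMI software: the regular expression, the `KNOWN_GENERA` set and the `count_species` function are all hypothetical, and a real pipeline would rely on a proper taxonomic dictionary and far more careful parsing.

```python
import re
from collections import Counter

# Simplified, hypothetical pattern for a Latin binomial: a capitalised genus
# followed by a lowercase species epithet, e.g. "Macropus giganteus".
BINOMIAL = re.compile(r"\b([A-Z][a-z]{2,})\s([a-z]{3,})\b")

# Illustrative lookup only; a hand-written set standing in for a real
# taxonomic dictionary.
KNOWN_GENERA = {"Macropus", "Homo", "Drosophila"}


def count_species(text: str) -> Counter:
    """Count candidate binomial names whose genus appears in KNOWN_GENERA."""
    counts = Counter()
    for genus, epithet in BINOMIAL.findall(text):
        # Filtering on the genus discards ordinary phrases such as
        # "Results were" that happen to fit the pattern.
        if genus in KNOWN_GENERA:
            counts[f"{genus} {epithet}"] += 1
    return counts


sample = (
    "We compared Macropus giganteus with Drosophila melanogaster. "
    "The Macropus giganteus samples were larger. Results were clear."
)
print(count_species(sample))
```

Even this toy version shows why the paper mark-up exercise was useful: a pattern alone matches plenty of ordinary sentence openings, so some kind of dictionary or context check is needed before a match can be treated as a fact.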

Exploding Open Science! Awareness, training, funding

Organised by:

Alexandre Hannud Abdo, Stefan Kasberger and Tom Olijhoek

Session summary coming soon….

The intention of this session is to discuss the development of open science through awareness, training and funding.

Expanding the adoption of Open Science, in its many trends, requires multiple simultaneous forces. Beyond intrinsic forces that may have all sorts of sources, there are three interaction forces that seem essential for percolation: awareness, training and funding. Of those, we could say that awareness has been much discussed and reasonably acted upon, training has been reasonably discussed but not acted upon accordingly, and funding has been seen as either a short-term or a long-term goal depending on the trend you’re considering. This workshop intends to probe how we’re collectively balancing our efforts among these forces: where we have been great, where we could be doing better, and where we must do better in the future.

In particular, we’ll devote ourselves to discussing concrete actions in training for open science, which is likely the force with the highest yields that we can autonomously achieve, yet seems to be one we’re having a hard time undertaking. Why? Who’s been doing it right? How do we multiply that?

Reimagining Scholarly Communication


Sched Entry

Etherpad

Storify

Organised by:

Stuart Lawson

Session summary coming soon….

The current scholarly communication system, largely built around publication in academic journals, is seriously flawed – too much knowledge is locked away behind paywalls and only accessible to those who can afford it. The open access movement has made great strides in opening up the world’s knowledge by creating open access journals and repositories. Could we do even better, and reimagine scholarly communication from the ground up to create a new system, with both the infrastructure and content liberated from commercial interest and open to everyone? In this session we will discuss what is already being done to achieve this and think about concrete actions to make it a reality.

Skills and Tools for Web Native Open Science

Organised by:

Sophie Kay (unfortunately unable to be in Berlin) and Karthik Ram

Session summary coming soon….

Research is becoming increasingly data intensive and computation driven across the board, from the life sciences to physics. There is also added pressure from funders and publishers for researchers to better document and share the outputs of their work, but despite a push for better practice, we’re still facing a gap between what computational skills (analysis, versioning, data management) researchers are expected to know and what they’re being taught at the university level.

There are a number of training programs out there (Software Carpentry, rOpenSci, Open Science Training Initiative, School of Data) working to provide additional support to researchers. But how do those skills map into the vision for more open, reproducible science? This session will explore both, providing hands on training and examples as well as fostering a discussion about how these skills lead to the vision of open research we’re all striving for.

Testing the efficiency of open versus traditional science #ovcs


Sched Entry

Etherpad

Blog post

Storify

Organised by:

Alexandre Hannud Abdo, Daniel Mietchen, Jenny Molloy

This session asked how we can test one proposed benefit of open science: increased efficiency in terms of the time and resources spent achieving a research objective. This research question was also discussed during the IDRC-funded project ‘Open and Collaborative Science in the Global South: Towards a Southern-led Research Agenda’, and the working paper arising from that project is open for comment online. However, this session wanted to move beyond the question to construct a way of answering it. The session split into three main groups to discuss the topic, tackling three areas:

- In which contexts could we study open science? [Focus on research subjects/communities]

- How could we fund research into open science vs traditional science? [Practical focus]

- How do we study efficiency of open science? [Methodological focus]

The full notes of discussions are available on the etherpad but it is clear that the challenges in research methodology and study design will take a lot of deep thought and consideration, particularly in light of the inherent variability in the outcomes of research projects. Some take away messages from the discussions were:

Moving forward with this endeavour, Daniel will be taking what we learned at OKFest14 to the Open Science, Open Issues conference in Brazil in August, to refine ideas during a whole day of open grant writing with the aim of getting nearer to a project proposal that could be submitted for funding. Watch this space and do add your name to the pad as we need all the help and domain expertise we can get to try and answer this important question and provide evidence for the effect of openness on one aspect of doing science.

Open Science meets Open Education meets Open Development – working better together

Led by:

Alexandre Hannud Abdo, Jenny Molloy, Tom Olijhoek, Raniere Silva, Marieke Guy

A small delegation from the open science fringe event travelled to Wikimedia DE and joined the Open Development fringe participants to discuss fostering collaborations between working groups and where we could best help each other. The small discussion group came up with three areas for increased cross-fertilisation:

- Open Education and Open Science could work together on how to teach open methods of knowledge production and on training for educators.

- Open Education would like greater interaction with the Open Development group regarding the types of educational data and educational resources that would be most helpful in their situations.

- Open Science would like feedback from Open Development practitioners on the types of scientific data that would be useful in their work, the types of research and community engagement that they consider necessary and how openness could play a role here. There could also be help in understanding openness in a development context and the challenges and questions that we are all approaching from our own disciplines and through our own activities.

Open Data and the Panton Principles for the Humanities. How do we go about that?

Organised by: Iain Emsley, facilitated by: Peter Kraker

The goal of this session is to devise a set of clear principles which describe what we mean by Open Data in the humanities, what these should contain and how to use them.

Peter kicked off with a presentation to get everyone up to speed (see below). We first went through definitions for open science and open data. Then, we looked at the Panton Principles for Open Data in Science and a proposed adaptation for the humanities.

Afterwards, we had an animated discussion on the issues of open data in the humanities (the discussion is summed up nicely in the Etherpad by Chris Kittel and David Dchtoo). This exchange gave us a number of problems with open data in the humanities to work with. Then, the participants got together into groups of 3 or 4 to come up with solutions to these problems. Finally, each group presented their solutions.

Problems with Open Data in the Humanities

Solutions for problems with Open Data in the Humanities

Great post! Here is another Open Science session from the festival:

Open Data and the Panton Principles for the Humanities. How do we go about that?

Sched entry: http://sched.co/1oRW5kv

Etherpad: https://pad.okfn.org/p/Panton_Principles_for_the_Humanities

Slides: slideshare.net/pkraker/opendata-humanities

Organized by Iain Emsley (Open Humanities Open Knowledge Working Group), facilitated by Peter Kraker (Know-Center/OKF Panton Fellow)

The goal of this session is to devise a set of clear principles which describe what we mean by Open Data in the humanities, what these should contain and how to use them.

Peter kicked off with a presentation to get everyone up to speed (see slideshare.net/pkraker/opendata-humanities). We first went through definitions for open science and open data. Then, we looked at the Panton Principles for Open Data in Science (see https://pantonprinciples.org) and a proposed adaptation for the humanities (see http://austgate.co.uk/2013/10/repost-of-principles-for-open-humanities-and-literature/).

Afterwards, we had an animated discussion on the issues of open data in the humanities (the discussion is summed up nicely in the Etherpad: https://pad.okfn.org/p/Panton_Principles_for_the_Humanities). This exchange gave us a number of problems with open data in the humanities (see http://i.imgur.com/GucCJaJ.jpg) to work with. Then, the participants got together into groups of 3 or 4 to come up with solutions to these problems. Finally, each group presented their solutions (see http://i.imgur.com/QuA62rA.jpg).