Improving openness, transparency and reproducibility in scientific research

This is a guest post for Open Access Week by Sara Bowman of the Open Science Framework.

Understanding reproducibility in science

Reproducibility is fundamental to the advancement of science. Unless experiments and findings in the literature can be reproduced by others in the field, the improvement of scientific theory is hindered. Scholarly publications disseminate scientific findings, and the process of peer review ensures that methods and findings are scrutinized prior to publication. Yet recent reports indicate that many published findings cannot be reproduced. Across domains, from organic chemistry (Trevor Laird, “Editorial Reproducibility of Results,” Organic Process Research and Development) to drug discovery (Asher Mullard, “Reliability of New Drug Target Claims Called Into Question,” Nature Reviews Drug Discovery) to psychology (Meyer and Chabris, “Why Psychologists’ Food Fight Matters,” Slate), scientists are discovering difficulties in replicating published results.

Various groups have tried to uncover why results are unreliable or what characteristics make studies less reproducible (see John Ioannidis’s “Why Most Published Research Findings Are False,” PLoS Medicine, for example). Still others look for ways to incentivize practices that promote accuracy in scientific publishing (see Nosek, Spies, and Motyl, “Scientific Utopia II: Restructuring Incentives and Practices to Promote Truth Over Publishability,” Perspectives on Psychological Science). In all of these, the underlying theme is the need for transparency surrounding the research process: to learn more about what makes research reproducible, we must know more about how the research was conducted and how the analyses were performed. Sharing data, code, and materials can shed light on the research design and analysis decisions that lead to reproducibility. Enabling and incentivizing these practices is the goal of the Open Science Framework, a free, open source web application built by the Center for Open Science.

The right tools for the job

The Open Science Framework (OSF) helps researchers manage their research workflow and enables data and materials sharing both with collaborators and with the public. The philosophy behind the OSF is to meet researchers where they are, while providing an easy means for opening up their research if it’s desired or the time is right. Any project hosted on the OSF is private to collaborators by default, but making the materials open to the public is accomplished with a simple click of a button.

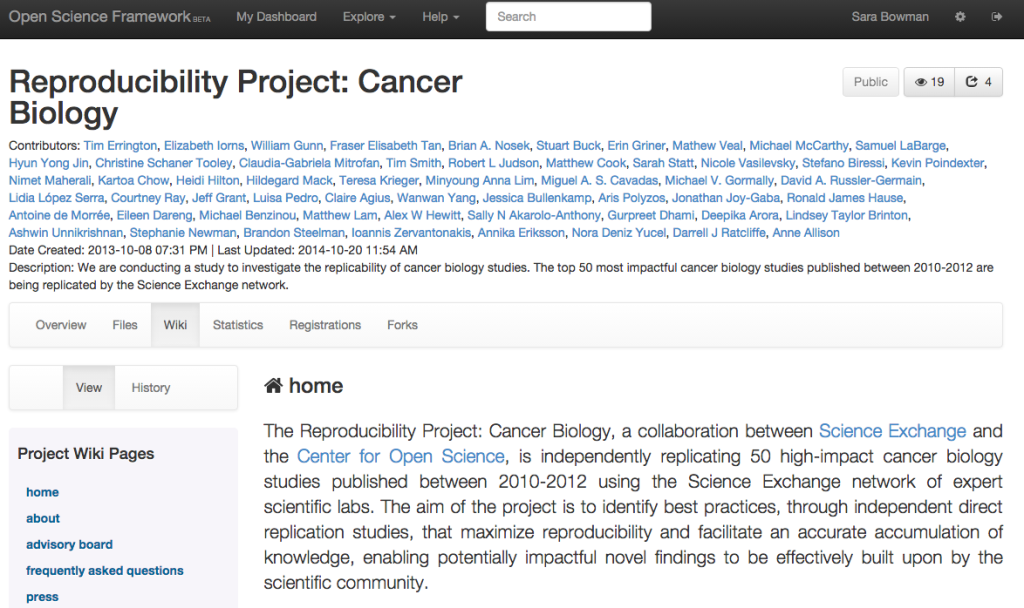

Here, the project page for the Reproducibility Project: Cancer Biology demonstrates the many features of the Open Science Framework (OSF). Managing contributors, uploading files, keeping track of progress and providing context on a wiki, and accessing view and download statistics are all available through the project page.

Features of the OSF facilitate transparency and good scientific practice with minimal burden on the researcher. The OSF logs all actions by contributors and maintains full version control. Every time a new version of a file is uploaded to the OSF, the previous versions are maintained so that a user can always go back to an old revision. The OSF performs logging and maintains version control without the researcher ever having to think about it: no added steps to the workflow, no extra record-keeping to deal with.
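To make the idea concrete, here is a minimal sketch of "keep every version, log every action." It is an illustration only and not the OSF's implementation: the `VersionedFile` class, its method names, and the example file name and contributor are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class VersionedFile:
    """Hypothetical model of a file whose earlier uploads are never discarded."""

    name: str
    versions: List[bytes] = field(default_factory=list)
    # Each log entry is (contributor, action, version number).
    log: List[Tuple[str, str, int]] = field(default_factory=list)

    def upload(self, contributor: str, content: bytes) -> int:
        """Append a new version, record the action, and return its 1-based number."""
        self.versions.append(content)
        number = len(self.versions)
        self.log.append((contributor, "upload", number))
        return number

    def get(self, version: Optional[int] = None) -> bytes:
        """Return the requested version, or the latest one when none is given."""
        if not self.versions:
            raise LookupError(f"{self.name} has no uploads yet")
        if version is None:
            return self.versions[-1]
        if not 1 <= version <= len(self.versions):
            raise LookupError(f"{self.name} has no version {version}")
        return self.versions[version - 1]


# Usage: the second upload does not overwrite the first.
script = VersionedFile("analysis.R")
script.upload("sara", b"first draft")
script.upload("sara", b"revised draft")
print(script.get(1))   # the old revision is still retrievable
print(script.log)      # the actions were recorded as a side effect of uploading
```

The point of the sketch is that logging and versioning happen inside `upload`, so the person uploading does nothing extra.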

The OSF integrates with other services (e.g., GitHub, Dataverse, and Dropbox) so that researchers can continue to use the tools that are practical, helpful, and already part of their workflow, while gaining value from the other features the OSF offers. An added benefit is seeing materials from a variety of services side by side: code on GitHub and files on Dropbox or Amazon S3 appear together on the OSF, streamlining research and analysis processes and improving workflows.

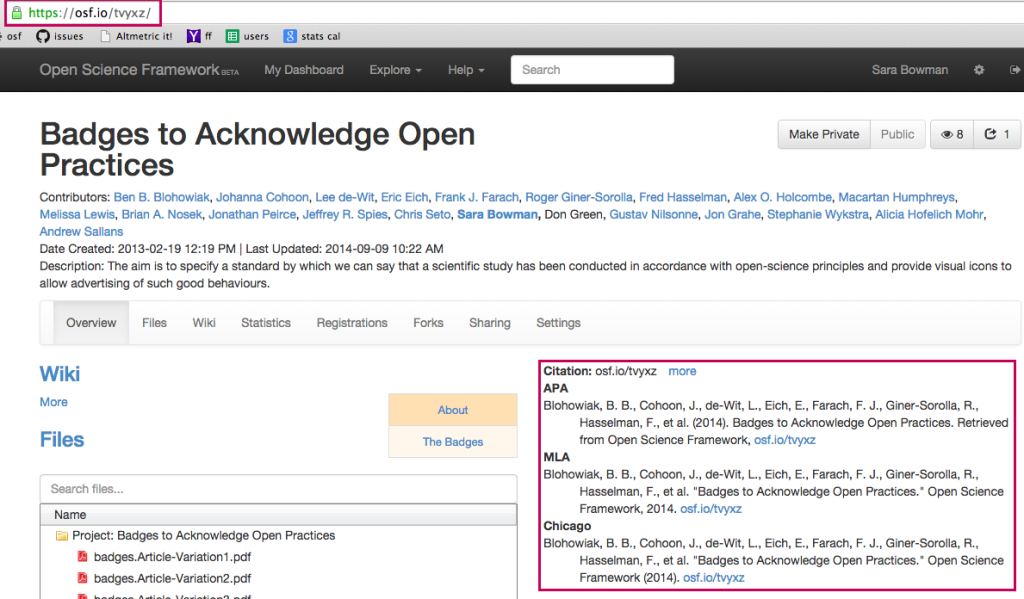

Each project, file, and user on the OSF has a persistent URL, making content citable. The project in this screenshot can be found at https://osf.io/tvyxz.

Other features of the OSF incentivize researchers to open up their data and materials. Each project, file, and user is given a globally unique identifier, making all materials citable and ensuring researchers get credit for their work. Once materials are publicly available, the authors can access statistics detailing the number of views and downloads of their materials, as well as geographic information about viewers. Additionally, the OSF applies the idea of “forks,” commonly used in open source software development, to scientific research. A user can create a fork of another project to indicate that the new work builds on the forked project or was inspired by it. A fork serves as a functional citation; as the network of forks grows, the interconnectedness of a body of research becomes apparent.
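The fork network described above can be pictured as a simple graph in which each fork points back to the project it was created from. The sketch below is an assumption-laden illustration, not the OSF's data model: the `ForkNetwork` class, its methods, and the project names are hypothetical, and it assumes each fork has exactly one source project.

```python
from typing import Dict, List, Optional


class ForkNetwork:
    """Hypothetical record of which project each fork was created from."""

    def __init__(self) -> None:
        # Maps a project ID to the ID it was forked from (None for originals).
        self._parent: Dict[str, Optional[str]] = {}

    def add_project(self, project_id: str) -> None:
        """Register an original (non-forked) project."""
        self._parent.setdefault(project_id, None)

    def fork(self, source_id: str, new_id: str) -> None:
        """Register new_id as a fork of source_id."""
        if source_id not in self._parent:
            raise KeyError(f"unknown project: {source_id}")
        if new_id in self._parent:
            raise ValueError(f"project already exists: {new_id}")
        self._parent[new_id] = source_id

    def lineage(self, project_id: str) -> List[str]:
        """Projects this one builds on, nearest source first."""
        chain: List[str] = []
        current = self._parent[project_id]
        while current is not None:
            chain.append(current)
            current = self._parent[current]
        return chain

    def descendants(self, project_id: str) -> List[str]:
        """All direct and indirect forks of a project, sorted by ID."""
        found: List[str] = []
        frontier = [project_id]
        while frontier:
            node = frontier.pop()
            children = [c for c, p in self._parent.items() if p == node]
            found.extend(children)
            frontier.extend(children)
        return sorted(found)


# Usage with made-up project names.
network = ForkNetwork()
network.add_project("original-study")
network.fork("original-study", "replication")
network.fork("replication", "extension")
print(network.lineage("extension"))
print(network.descendants("original-study"))
```

Walking `lineage` answers "what does this work build on?", while `descendants` answers "what has built on this work?", which is the sense in which a fork acts like a citation.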

Openness and transparency about the scientific process inform the development of best practices for reproducible research. The OSF seeks both to enable that transparency, by taking care of “behind the scenes” logging and versioning without added burden on the researcher, and to improve overall efficiency for researchers and their daily workflows. By providing tools for researchers to easily adopt more open practices, the Center for Open Science and the OSF seek to improve openness, transparency, and, ultimately, reproducibility in scientific research.

The Open Science Framework looks like a very interesting initiative and seems like an alternative to, for example, figshare, with some very useful features. There is one thing I do not understand, though. Forking seems an inadequate mechanism when understood as a form of citation. Forks, at least as I know them from GitHub, only signal “inheritance” from one primary source project, and so seem unable to reflect inspiration from, or building upon, multiple previous projects the way traditional citations do in the literature.