All metrics are wrong, but some are useful

Altmetrics, web-based metrics for measuring research output, have recently received a lot of attention. Although they only emerged in 2010, altmetrics have become a phenomenon both in the scientific community and in the publishing world. This year alone, EBSCO acquired Plum Analytics, Springer added Altmetric data to SpringerLink, and Scopus augmented articles with Mendeley readership statistics.

Altmetrics have a lot of potential. They usually become available earlier than citation-based metrics, which allows articles to be evaluated soon after publication. Altmetrics also make it possible to assess the many outcomes of research beyond the paper itself: data, source code, presentations, blog posts, and so on.

One of the problems with the recent hype surrounding altmetrics, however, is that it leads some people to believe that altmetrics are somehow intrinsically better than citation-based metrics. They are, of course, not. In fact, if we simply replace the impact factor with some aggregate of altmetrics, we have gained nothing. Let me explain why.

The problem with metrics for evaluation

You might know this famous quote:

“All models are wrong, but some are useful” (George Box)

It refers to the fact that every model is a simplified view of the world. In order to generalize phenomena, we must leave out some of the details. Thus, a model can never explain a phenomenon in full, but it might be able to explain the main characteristics of many phenomena that fall into the same category. The models that can do that are the useful ones.

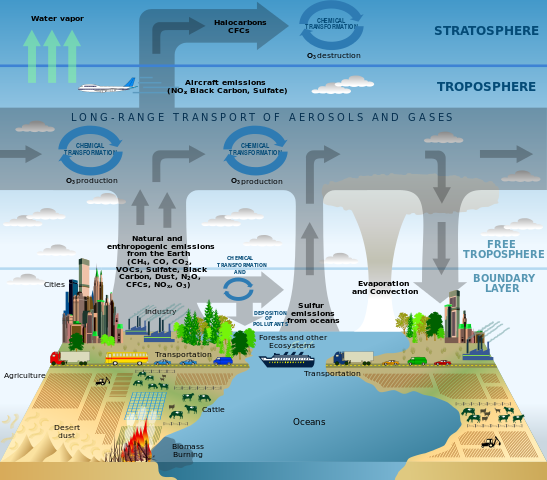

Example of a scientific model, explaining atmospheric composition based on chemical and transport processes. Source: Strategic Plan for the U.S. Climate Change Science Program (Image by Philippe Rekacewicz)

The very same can be said about metrics, with the important caveat that metrics have far less explanatory power than a model. Metrics might tell you something about the world in a quantified way, but for the how and why we need models and theories. Matters become even worse when we are talking about metrics that are generated in the social world rather than the physical world. Humans are notoriously unreliable, and it is hard to pinpoint the motives behind their actions. A paper may be cited, for example, to confirm or refute a result, or simply to acknowledge it. A paper may be tweeted to showcase good research or to condemn bad research.

In addition, all of these measures are susceptible to gaming. According to ImpactStory, an article with just 54 Mendeley readers is already in the 94th to 99th percentile (thanks to Juan Gorraiz for the example). Getting your paper into the top ranks is therefore easy. And even indicators like downloads or views that go into the hundreds of thousands could probably be gamed with a simple script deployed on a couple of university servers around the country. This makes the old citation cartel look pretty labor-intensive, doesn't it?

Why we still need metrics and how we can better utilize them

Don’t get me wrong: I do not think that we can get by without metrics. Science is still growing exponentially, and therefore we cannot rely on qualitative evaluation alone. There are just too many papers published, too many applications for tenure-track positions submitted, and too many journals and conferences launched each day. In order to address the concerns raised above, however, we need to move away from a single number determining the worth of an article, a publication, or a researcher.

One way to do this would be a more sophisticated evaluation system that is based on many different metrics and that gives context to these metrics. This would require working towards a better understanding of how and why measures are generated and how they relate to each other. By analogy with models, we have to find those numbers that give us a good picture of the many facets of a paper: the useful ones.
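To make the idea of "many metrics with context" a little more concrete, here is a minimal sketch, assuming each metric is compared against counts from a set of comparable articles (for instance, same field and publication year). The function names, metric names, and all numbers below are hypothetical and purely illustrative; the percentile convention used (share of reference values less than or equal to the article's value) is one of several possible choices.

```python
from bisect import bisect_right


def percentile_rank(value, reference):
    """Percentage of reference values that are less than or equal to `value`."""
    ordered = sorted(reference)
    return 100.0 * bisect_right(ordered, value) / len(ordered)


def metric_profile(article, reference_set):
    """Report each metric separately, with its percentile in a reference set.

    Deliberately returns no combined score: every metric keeps its raw count
    and its context (the percentile among comparable articles).
    """
    return {
        name: {"raw": value, "percentile": percentile_rank(value, reference_set[name])}
        for name, value in article.items()
        if reference_set.get(name)  # skip metrics with no comparison data
    }


# Hypothetical reference counts for comparable articles (same field and year).
reference_set = {
    "citations": [0, 1, 2, 3, 5, 8, 13, 21, 34, 55],
    "readers": [0, 0, 1, 1, 2, 3, 5, 8, 13, 54],
}
# Hypothetical article; "tweets" has no reference data and is left out.
article = {"citations": 3, "readers": 13, "tweets": 7}

profile = metric_profile(article, reference_set)
for name, entry in profile.items():
    print(f"{name}: {entry['raw']} (percentile {entry['percentile']:.0f})")
```

The design choice is to keep the raw count next to its percentile rather than collapsing everything into a weighted sum. In a skewed reference set, a modest count can already rank high, so seeing both numbers side by side makes that visible instead of hiding it in a single score.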

As I have argued before, visualization would be a good way to represent the different dimensions of a paper and its context. Furthermore, the way the metrics are generated must be open and transparent to make gaming of the system more difficult, and to expose the biases that are inherent in humanly created data. Last, and probably most crucially, we, the researchers and the research evaluators, must critically review the metrics that are served to us.

Altmetrics not only give us new tools for evaluation; their introduction also presents us with the opportunity to revisit academic evaluation as a whole. Let’s seize this opportunity!

[…] All metrics are wrong, but some are useful: a very compelling post by Peter Kraker arguing that alternative metrics such as altmetrics also always distort reality. Instead, the goal should be to take context into account as well (including by combining several metrics). I at least find the idea of a visual approach exciting. […]

[…] This is a reblog from the OKFN Science Blog. As part of my duties as a Panton Fellow, I will be regularly blogging there about my activities […]

[…] are open and transparent metrics so important? Behind this lies the idea of “All Models are wrong, but some are […]

“metrics are generated must be open and transparent to make gaming of the system more difficult, and to expose the biases that are inherent in humanly created data”

Very true. Understanding the proxy nature of data (and how well or questionably the proxy fits) is important.

http://management.curiouscatblog.net/2004/08/29/dangers-of-forgetting-proxy-nature-of-data/

Data can’t lie (http://management.curiouscatblog.net/2007/08/09/data-cant-lie/), but we often make it easy for others to mislead us when we don’t understand (or question) what the data really means (what operational definitions were used in the collection, etc.).

[…] blog post entitled “All metrics are wrong, but some are useful” sums up my views on (alt)metrics: I argue that no single number can determine the worth of an […]